I am a Research Engineer at Meta Reality Labs. I earned my master’s degree in Computer Vision from Carnegie Mellon University. Previously, I completed my bachelor’s degree in Computer Science and Technology at Zhejiang University, where I was advised by Prof. Hongzhi Wu.

My research interests include Computer Graphics and 3D Vision.

📖 Education

Carnegie Mellon University, Pittsburgh, U.S. Sep 2023 - Dec 2024

- Program: Master of Science in Computer Vision

- Cumulative QPA: 4.0/4.0

Zhejiang University, Hangzhou, China Sep 2019 - Jun 2023

- Degree: Bachelor of Engineering

- Honors degree from Chu Kochen Honors College

- Major: Computer Science and Technology

- Overall GPA: 94.6/100 (3.98/4.0)

- Ranking: 1/125

💻 Experience

Meta, Pittsburgh, U.S. Jan 2025 - Present

- Position: Research Engineer

ByteDance, San Jose, U.S. May 2024 - Aug 2024

- Position: AR Effect Engineer Intern

Microsoft Research Asia, Beijing, China Mar 2022 - Jun 2023

- Position: Research Intern, Internet Graphics Group

- Advisors: Dr. Yizhong Zhang, Dr. Yang Liu

📝 Projects

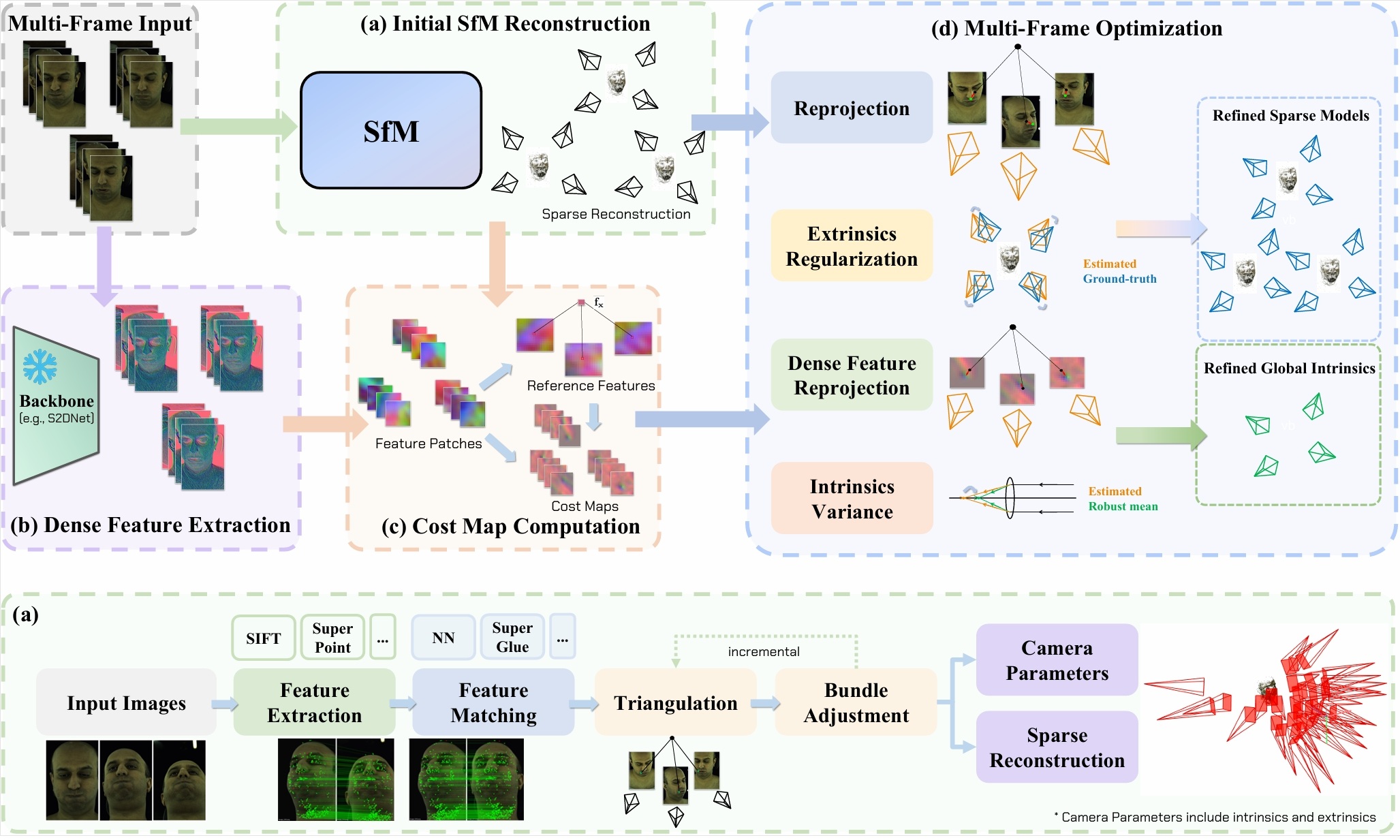

Multi-Cali Anything [Project Page] [Paper] [Code] Sep 2024 - Dec 2024

Research Project at CMU; Accepted at IROS 2025

Calibrating large-scale camera arrays, such as those in dome-based setups, is time-intensive and typically requires dedicated captures of known patterns. We propose a dense-feature-driven multi-frame calibration method that refines intrinsics directly from scene data, eliminating the need for additional calibration captures. Our approach enhances traditional Structure-from-Motion (SfM) pipelines with three terms: an extrinsics regularization term that progressively aligns estimated extrinsics with ground-truth values, a dense feature reprojection term that reduces keypoint errors by minimizing reprojection loss in feature space, and an intrinsics variance term for joint optimization across multiple frames. Experiments on the Multiface dataset show that our method achieves nearly the same precision as dedicated calibration processes and significantly improves intrinsics and 3D reconstruction accuracy. Fully compatible with existing SfM pipelines, our method provides an efficient, practical plug-and-play solution for large-scale camera setups.
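As a rough illustration of the intrinsics variance term, the sketch below penalizes how far each frame's estimate of one camera's intrinsics drifts from the cross-frame mean. The function name, the `(fx, fy, cx, cy)` parameterization, and the unweighted sum of per-parameter variances are assumptions made for illustration; the formulation in the paper may differ.

```python
from statistics import fmean


def intrinsics_variance(per_frame_intrinsics):
    """Hypothetical variance penalty for a single camera.

    per_frame_intrinsics: list of (fx, fy, cx, cy) tuples, one per frame.
    Returns the population variance of each parameter across frames,
    summed over fx, fy, cx, cy. Minimizing it alongside the reprojection
    terms pulls the per-frame estimates toward one shared set of intrinsics.
    """
    n = len(per_frame_intrinsics)
    if n < 2:
        return 0.0  # a single frame has nothing to be consistent with
    total = 0.0
    for values in zip(*per_frame_intrinsics):  # one tuple per parameter
        mean = fmean(values)
        total += sum((v - mean) ** 2 for v in values) / n
    return total
```

For two frames `(1000, 1000, 500, 500)` and `(1002, 998, 500, 500)`, `fx` and `fy` each contribute a variance of 1, so the penalty is 2.0.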

Optimizing and designing features for Effect House's Visual Effects system May 2024 - Aug 2024

Internship Project at ByteDance

In this internship project, I optimized the particle attribute buffer in Effect House's Visual Effects (VFX) system, reducing memory usage by more than 50% for most template VFX effects in Effect House. I also implemented a simulation node in the VFX graph editor that lets users treat the VFX system as a general-purpose compute pipeline rather than only a GPU particle system, so they can build custom physics simulation effects. Finally, I implemented a 3D Gaussian Splatting output node that renders 3D scenes using VFX particles.

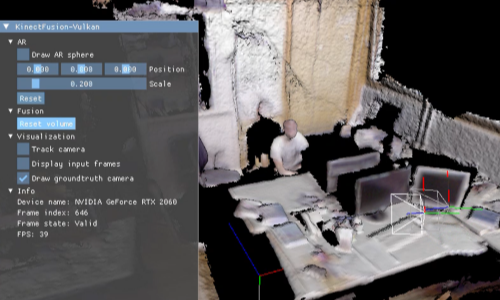

KinectFusion - Vulkan [Project Page] Mar 2024 - Apr 2024

Course Project for Robot Localization and Mapping (16-833)

In this project, I implemented KinectFusion on Vulkan. Unlike CUDA, which runs only on NVIDIA GPUs, Vulkan is a cross-platform graphics API that supports both graphics rendering and parallel computing. As a result, my implementation is cross-platform and performs real-time camera tracking, scene reconstruction, and graphics rendering simultaneously. The estimated camera poses can also be used to render virtual objects onto the input RGB images, producing AR effects.
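For readers unfamiliar with KinectFusion's fusion step, the sketch below shows the standard per-voxel TSDF update as a running weighted average of truncated signed distances. It is plain Python rather than the project's Vulkan compute shaders, and the function name, the constant observation weight, and the `max_weight` cap are illustrative assumptions.

```python
def update_voxel(tsdf, weight, sdf, trunc, max_weight=64.0):
    """Fuse one signed-distance observation into a voxel (KinectFusion-style).

    tsdf, weight: current voxel value in [-1, 1] and its accumulated weight.
    sdf: measured depth minus the voxel's depth along the camera ray.
    trunc: truncation distance mu, in the same units as sdf.
    Returns the updated (tsdf, weight).
    """
    if sdf < -trunc:
        # Voxel lies far behind the observed surface: occluded, no update.
        return tsdf, weight
    f = min(1.0, sdf / trunc)  # truncate: f is in [-1, 1] here
    w = 1.0                    # assumed constant per-observation weight
    new_tsdf = (weight * tsdf + w * f) / (weight + w)
    new_weight = min(weight + w, max_weight)  # cap keeps the model adaptive
    return new_tsdf, new_weight
```

On the GPU, this same update would typically run once per voxel in parallel after projecting the voxel into the current depth image; the zero crossing of the fused TSDF is the reconstructed surface.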

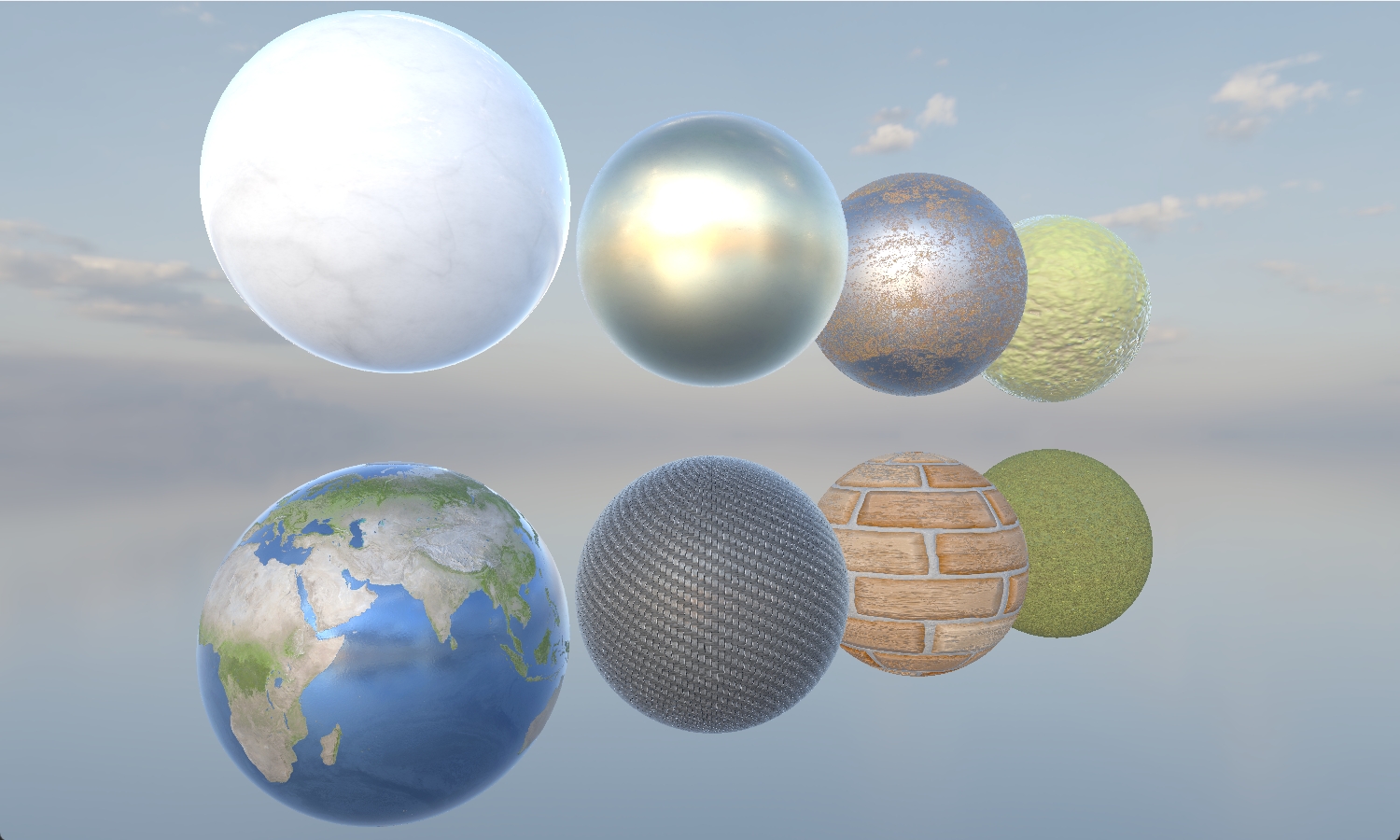

Render72: A real-time renderer based on Vulkan [Project Page] Jan 2024 - Apr 2024

Course Project for Real-Time Graphics (15-472)

I developed a real-time renderer based on Vulkan. It supports multiple material types, including mirror, Lambertian, and PBR. Scenes can include an environment map for image-based lighting, using precomputed radiance and irradiance lookup tables. The renderer also supports analytic lights with shadow mapping (perspective, omnidirectional, and cascaded), as well as deferred shading and screen-space ambient occlusion (SSAO).
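Cascaded shadow maps need split distances along the view frustum. One common choice, the practical split scheme, blends logarithmic and uniform splits; the sketch below shows that scheme as an assumption about how such splits can be chosen, not a description of Render72's code. The function name and the `lam` blend factor are hypothetical.

```python
def cascade_splits(near, far, num_cascades, lam=0.5):
    """Return the far distance of each cascade, from nearest to farthest.

    lam = 1.0 gives purely logarithmic splits (finer cascades near the
    camera); lam = 0.0 gives purely uniform splits.
    """
    if not (0.0 < near < far) or num_cascades < 1:
        raise ValueError("need 0 < near < far and at least one cascade")
    splits = []
    for i in range(1, num_cascades + 1):
        t = i / num_cascades
        log_split = near * (far / near) ** t      # even spacing in log-depth
        uniform_split = near + (far - near) * t   # even spacing in depth
        splits.append(lam * log_split + (1.0 - lam) * uniform_split)
    return splits
```

For example, `cascade_splits(1.0, 100.0, 2, lam=1.0)` returns `[10.0, 100.0]`, so the first shadow map covers only the nearest tenth of the depth range.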

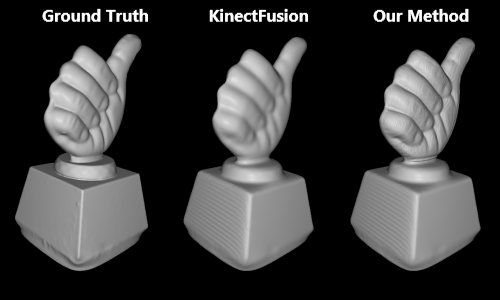

Anti-Blur Depth Fusion based on Vision Cone Model Nov 2022 - Jun 2023

Research Project at Microsoft Research Asia

We proposed a depth fusion method that fuses low-resolution depth images while preserving high-resolution detail in the global model. Traditional methods like KinectFusion optimize the reconstruction by minimizing the difference between reconstructed and captured depths, treating each pixel as a single sample of the surface. As a result, they may produce blurred or aliased reconstructions when the image resolution is low. Our method instead assumes that the captured depth of a pixel equals the average of the actual depths within the pixel's vision cone. We designed loss functions that minimize the difference between the average reconstructed depth within each cone and the captured depth. We tested our method on both SDF voxel and mesh representations, and it produced better reconstructions than KinectFusion.
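To make the vision cone assumption concrete, the toy sketch below contrasts a single center-ray loss with a cone-averaged loss for a pixel that straddles a depth edge. The helper names and the idea of sampling the cone with a few sub-pixel rays are illustrative assumptions, not the project's implementation.

```python
from statistics import fmean


def center_ray_loss(center_depth, captured_depth):
    """Single-sample residual: one reconstructed depth per pixel."""
    return (center_depth - captured_depth) ** 2


def cone_loss(sub_ray_depths, captured_depth):
    """Vision-cone residual: the captured depth is modeled as the mean of
    reconstructed depths sampled at (hypothetical) sub-rays in the cone."""
    return (fmean(sub_ray_depths) - captured_depth) ** 2


def total_cone_loss(pixels):
    """Sum the cone residual over (sub_ray_depths, captured_depth) pairs."""
    return sum(cone_loss(d, z) for d, z in pixels)
```

For sub-ray depths `[1.0, 1.0, 2.0, 2.0]` and a captured depth of `1.5`, the cone loss is zero while the center-ray loss at depth `1.0` is `0.25`, so only the single-sample objective pushes the sharp edge toward a blurred surface at 1.5.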

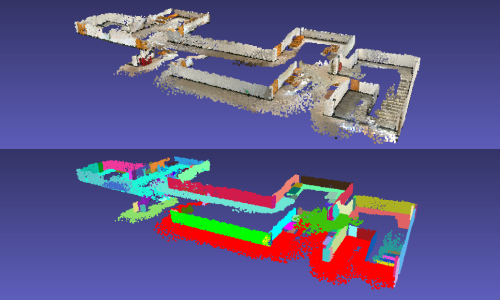

Real-Time SLAM System based on ARKit Framework Mar 2022 - Oct 2022

Research Project at Microsoft Research Asia

We developed a SLAM system that tracks the camera trajectory in real time while scanning indoor scenes with rich planar structures, using only an iOS device such as an iPhone or iPad. The system obtains RGB-D data from the LiDAR sensor, along with camera poses estimated by the ARKit framework. It then searches for coplanar and parallel planes in the scene and uses them to optimize the camera poses. Meanwhile, it uses a vocabulary tree and a confusion map to detect loops globally, and users can confirm detected loops through the UI to improve precision. To avoid running out of memory during long scans, it stores infrequently visited data in an embedded database. Experiments show that our method improves on ARKit's camera localization and loop detection, and it lets users scan large indoor scenes while running at real-time frame rates to give them immediate feedback.
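The coplanar and parallel plane search can be illustrated with a simple pairwise test on planes stored as a unit normal `n` and offset `d` (points `x` with `n · x + d = 0`). The representation, the function name, and the 5-degree and 3 cm thresholds are hypothetical choices for this sketch, not values from the project.

```python
import math


def plane_relation(p1, p2, angle_deg=5.0, dist_tol=0.03):
    """Classify two planes given as (unit_normal, offset).

    Returns "coplanar", "parallel", or "none".
    """
    (n1, d1), (n2, d2) = p1, p2
    dot = sum(a * b for a, b in zip(n1, n2))
    if abs(dot) < math.cos(math.radians(angle_deg)):
        return "none"  # normals differ by more than the angle threshold
    if dot < 0:
        # (n2, d2) and (-n2, -d2) describe the same plane; flip so both
        # normals point the same way before comparing offsets.
        d2 = -d2
    if abs(d1 - d2) <= dist_tol:
        return "coplanar"
    return "parallel"
```

Coplanar pairs (for example, two patches of the same floor seen from different frames) and parallel pairs (floor and ceiling) would then serve as constraints in the pose optimization.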

🔧 Skills

- Programming Languages: C/C++, Python, JavaScript, Swift, Objective-C, Verilog

- Tools & Frameworks: Vulkan, OpenGL, Metal, OpenCV, CUDA, PyTorch, NumPy, Doxygen, CMake